| Title |

Description |

Lab |

Bib |

Video |

|

|

Robot@CWE |

This is the Final demonstrator of the FP7 IP Robot@CWE project. |

JRL-Japan

|

|

|

|

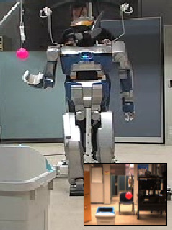

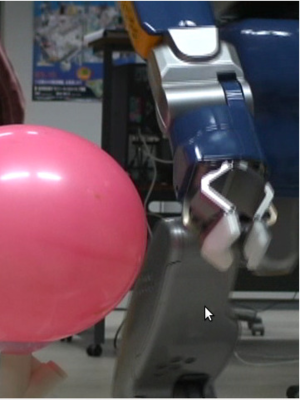

Grasping while walking |

Grasping a ball with visual guidance while walking. First demonstration with the presented framework during the end of Nicolas Mansard's PhD |

JRL-Japan

|

|

|

|

Open the fridge |

The robot opens a fridge to grasp a can. The motion results from an optimized task sequence. The motion was implemented by F. Keith during his PhD thesis. |

JRL-Japan

|

|

|

|

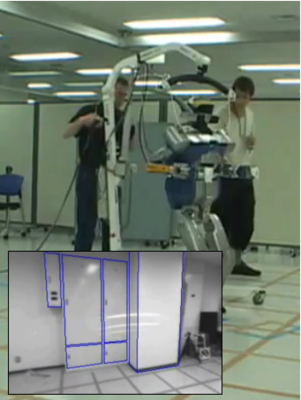

Haptic communication |

Study about the haptic (non verbal) communication between two human partners during a task implying physical collaboration. The resulting strategies are applied to drive the humanoid robot decisions. The motion was programmed by A. Bussy during his PhD thesis. |

JRL-Japan

|

|

|

|

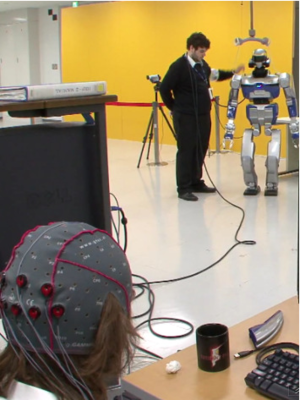

Brain computer interface |

The robot is driven by symbolic orders that are selected by a brain-computer interface. The orders are converted into tasks that are applied by the robot. The demonstration was developped by P. Gergondet during his PhD thesis. It is one of the main demonstrators of the FP7 IP VERE project. |

JRL-Japan

|

|

|

|

Active vision |

The robot autonomously selects the most relevant poses to improve the 3D reconstruction of an ob ject. The built model is then used for the "Treasure hunting" project, led by O. Stasse [Saidi 07]. The active-search movements have been develop ed by T. Foissote during his PhD thesis. |

JRL-Japan

|

|

|

|

Joystick-controlled walk |

First application of the "joystick-drive" walking pattern generator. The COM tra jectory is the closest to the velo city input given by the joystick [Herdt 10]. The footprints are computed during the COM trajectory optimization and bounded to stay inside a security zone computed during a learning process [Stasse 09]. |

JRL-Japan

INRIA

|

|

|

|

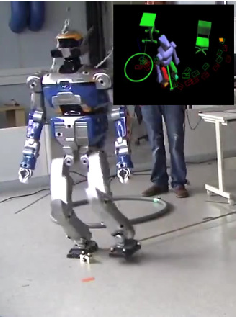

Visual servoing of the walk |

Similarly to the previous item, the robot COM and footprints are computed by model-predictive control. This time, the robot velocity is input by the camera feedback, following a visual-servoing scheme. |

JRL-Japan

|

|

|

|

Obstacle avoidance |

The obstacles are locally taken into account in the control scheme. The task used to prevent the collision is based on a smooth body envelope [Escande 07]. |

JRL-Japan

|

|

|

|

Skin for humanoid robots |

Several tactile and proximetric sensor cells are attached to the HRP-2 robot cover. They are used to guide the robot and teach by showing how to grasp various classes of ob ject using whole-body grasps. |

JRL-Japan

TUM

|

|

|

| Title |

Description |

Lab |

Bib |

Video |

|

|

Pursuit-evasion planning |

A navigation trajectory is computed while taking into account the robot visibility constraints. The plan is then executed using the walking pattern generator and the proposed whole-body resolution scheme. |

JRL-Japan

CIMAT

|

|

|

|

Bi-manual visual servoing |

The head and both arms are controlled following a 2D visual feedback. |

LAAS

LASMEA

|

|

|

|

Fast footstep replanning |

Using fast feasibility tests, the footsteps leading to a goal position are recomputed on the light to track modifications of the environment. The footstep plan is then executed by inverse kinematics |

LAAS

JRL-Japan

|

|

|